Bringing it All Together 4/19

On the urging of Rob, I looked at Root Mean Square (RMS) for determining onsets and offsets. RMS does an excellent job of identifying note onsets and offsets if the notes are completely disjunct. If two notes are played without space in between, RMS does not find the boundary because it only looks at the amplitude of the incoming signal. I am going to compensate for this by checking for note onsets using spectral flux, and by using a combination of spectral flux and RMS to find offsets. I have to experiment a lot with the threshold values that decide when a note counts as turning on, and that is mostly tuning by trial and error.
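To make the RMS limitation concrete, here is a minimal sketch, not the code from my tool. The names `computeRms` and `sineFrame`, the 44100 Hz sample rate, and the 0.8 amplitude are all assumptions for illustration. It shows that two different pitches at the same loudness give almost the same RMS, so a change of note with no gap is invisible to it.

```javascript
// Root mean square of one frame of audio samples (values in [-1, 1]).
function computeRms(frame) {
  if (frame.length === 0) return 0;
  let sumOfSquares = 0;
  for (const sample of frame) {
    sumOfSquares += sample * sample;
  }
  return Math.sqrt(sumOfSquares / frame.length);
}

// Hypothetical helper: fill a frame with a sine wave at the given frequency.
function sineFrame(frequencyHz, length, sampleRate = 44100, amplitude = 0.8) {
  const frame = new Float32Array(length);
  for (let i = 0; i < length; i++) {
    frame[i] = amplitude * Math.sin((2 * Math.PI * frequencyHz * i) / sampleRate);
  }
  return frame;
}

const silence = new Float32Array(1024); // all zeros
const noteA = sineFrame(440, 1024);
const noteB = sineFrame(660, 1024);

// Silence is clearly separated from sound...
console.log("silence:", computeRms(silence));
// ...but the two pitches are both close to 0.8 / sqrt(2), so RMS alone
// cannot tell where one note stops and the next one starts.
console.log("440 Hz:", computeRms(noteA));
console.log("660 Hz:", computeRms(noteB));
```

This is why RMS stays as the offset check while spectral flux takes over the job of spotting a new note.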

Now it is time to combine both parts, the pitch detection and the onset detection. I haven't finished implementing it yet, but I outlined some pseudocode to make sure I understood the project flow.

    findOnsetPitch(buffer) {
        run entire onset_detection routine
        var noteOn = false
        iterate through array of flux peaks {
            if (value != 0 && noteOn == false) {
                // a peak while no note is sounding: a new note starts
                pass next 1024 samples to pitch_detect
                create new Note instance (startSamples, pitch in Hz returned from pitch_detect)
                noteOn = true
            } else if (value != 0 && noteOn == true) {
                if (current RMS value < .3) {
                    // a peak that lands on near silence: the note ended
                    noteOn = false
                    add where note stopped to the last created Note instance
                } else {
                    // a peak while still loud: one note ends and the next begins
                    add where note stopped to the last created Note instance
                    pass next 1024 samples to pitch_detect
                    create new Note instance (startSamples, pitch in Hz returned from pitch_detect)
                }
            } else if (noteOn == true && current RMS value < .3) {
                // no peak, but the signal went quiet: the note ended
                noteOn = false
                add where note stopped to the last created Note instance
            }
        }
    }

The Note instance is a new class I created to handle each note. It will contain where a note starts, where it stops, and its pitch. It is there to hold all the information I need for a single note. I started putting together the combined function, but it is very Frankenstein's Monster right now. I will continue to work on it this week. I don't have high hopes for it working right the first time at all.
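Here is a rough runnable sketch of that flow, under some loud assumptions: the onset routine has already produced one flux peak value and one RMS value per frame, `detectPitch` is a stand-in callback for running pitch detection on the next 1024 samples, and the hop size of 512 is made up. Only the 0.3 RMS limit comes from the pseudocode. The real function will almost certainly look different.

```javascript
// One detected note: where it starts, where it stops, and its pitch in Hz.
class Note {
  constructor(startSample, pitchHz) {
    this.startSample = startSample;
    this.pitchHz = pitchHz;
    this.endSample = null; // filled in once the note stops
  }
}

// fluxPeaks[i] and rmsValues[i] describe frame i (hypothetical layout).
// detectPitch(samplePosition) is a placeholder for the pitch detection call.
function findOnsetPitch(fluxPeaks, rmsValues, detectPitch, hopSize = 512, rmsFloor = 0.3) {
  const notes = [];
  let noteOn = false;

  for (let i = 0; i < fluxPeaks.length; i++) {
    const position = i * hopSize;
    const isPeak = fluxPeaks[i] !== 0;
    const isQuiet = rmsValues[i] < rmsFloor;

    if (isPeak && !noteOn) {
      // Peak while nothing is sounding: a new note starts.
      notes.push(new Note(position, detectPitch(position)));
      noteOn = true;
    } else if (noteOn && isQuiet) {
      // The signal dropped below the RMS floor: the current note ends.
      notes[notes.length - 1].endSample = position;
      noteOn = false;
    } else if (isPeak && noteOn) {
      // Peak while still loud: the old note ends and a new one begins.
      notes[notes.length - 1].endSample = position;
      notes.push(new Note(position, detectPitch(position)));
    }
  }
  return notes;
}

// Toy input: two notes played back to back, then silence.
const fluxPeaks = [0, 1, 0, 0, 1, 0, 0, 0];
const rmsValues = [0, 0.6, 0.6, 0.6, 0.6, 0.6, 0.1, 0];
const fakePitch = (position) => (position < 4 * 512 ? 440 : 660);

const notes = findOnsetPitch(fluxPeaks, rmsValues, fakePitch);
console.log(notes);
```

With the toy input, the second flux peak arrives while the RMS is still high, so it closes the first note and opens the second. That is the case RMS could not handle on its own.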

Adding Onset Detection with Spectral Flux 4/12

An update to last week: it turns out the issue with my code had nothing to do with pass by reference. The issue, which is glaringly obvious now, is that when trying to get the last element of the array I called the array currentNoteArray, not currNoteArray. So pitch detection now works properly and will save each new pitch that is found.
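For my own future reference, a small sketch of the corrected logic. The helper name `appendIfChanged` is made up for this example, and I am assuming no variable called `currentNoteArray` exists anywhere else on the page. It also shows why pass by reference was never the problem: numbers in JavaScript are copied by value when they are pushed into an array.

```javascript
// Add a MIDI note only if the list is empty or the note differs from the last one.
function appendIfChanged(notes, note) {
  if (notes.length === 0 || notes[notes.length - 1] !== note) {
    notes.push(note);
  }
  return notes;
}

const currNoteArray = [];
for (const midiNote of [69, 69, 71, 71, 69]) {
  appendIfChanged(currNoteArray, midiNote);
}
console.log(currNoteArray); // [ 69, 71, 69 ]

// Numbers are stored by value: changing the variable later
// does not change what was already pushed into the array.
let latest = 60;
const stored = [latest];
latest = 62;
console.log(stored[0]); // 60

// Using a name that was never declared does not silently misbehave,
// it throws a ReferenceError.
let typoThrows = false;
try {
  currentNoteArray.push(60); // misspelled name, never declared in this sketch
} catch (error) {
  typoThrows = error.name === "ReferenceError";
}
console.log(typoThrows); // true
```

Checking the browser console for a ReferenceError would have found the typo much faster than reading about pass by reference.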

This week I started looking at this onset detection. This method uses spectral flux. Spectral flux is a measure of how quickly the power spectrum of a signal is changing. The power is found by performing a fast Fourier transform and then summing the total value of the signal.

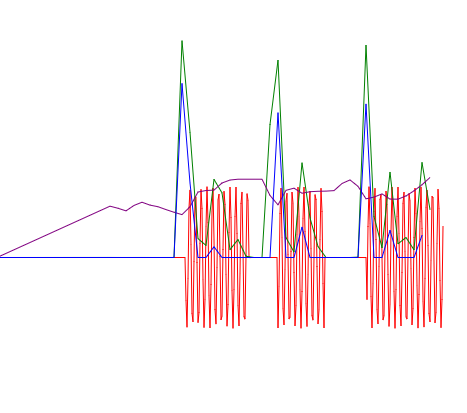

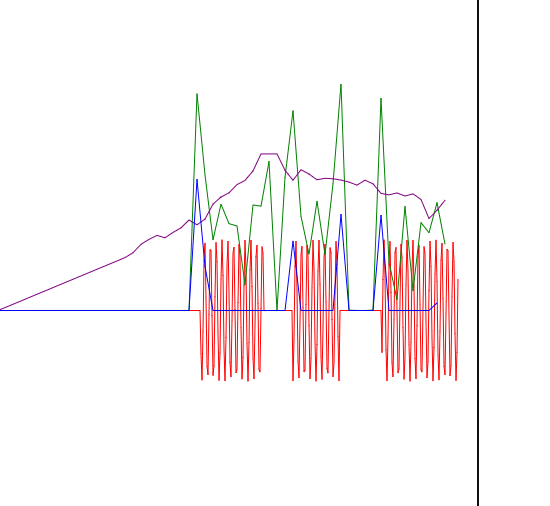

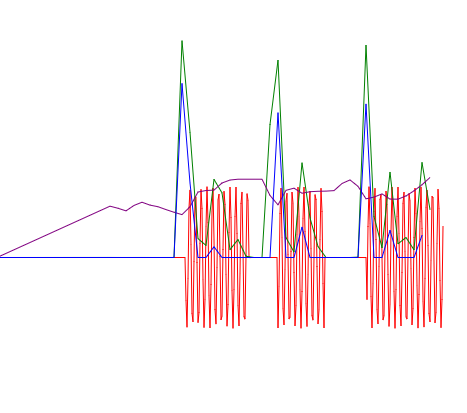

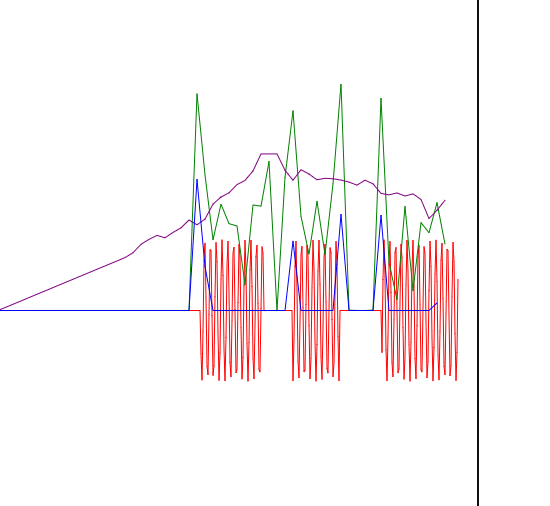

The method that was implemented by default only summed increases in the energy from the previous bin. The application provides a lovely visualization. This is the visualization with the current method for 3 sine wave blips.

The red line is the input signal. The green line is the spectral flux. The purple line is the activation threshold determined by the local average of the signal. The blue line indicates where the spectral flux exceeded the threshold value. As you can see, it only activates on the onset of the note not when it ends. My thought was to change the inclusion of only increases to both increases and decreases by using absolute value.

This method is able to capture the onset and offset of notes. Well maybe not notes but definitely sine waves. There is some error with the middle of the notes, but that was present in the original method so I will have to dive into the algorithm further. I have not implemented this into my tool displayed on the website. I have been playing with this offline.
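Here is a small sketch of the difference between the two versions. This is not the code from the application I was playing with. The function names, the window size, and the 1.5 multiplier are my own assumptions, and I am feeding in tiny made-up magnitude spectra instead of running a real FFT. I am also assuming the comparison is made bin by bin between consecutive frames.

```javascript
// Spectral flux between two magnitude spectra of equal length.
// rectify = true  -> only sum increases in energy (onsets only)
// rectify = false -> sum absolute changes (onsets and offsets)
function spectralFlux(prevSpectrum, currSpectrum, rectify = true) {
  let flux = 0;
  for (let bin = 0; bin < currSpectrum.length; bin++) {
    const diff = currSpectrum[bin] - prevSpectrum[bin];
    flux += rectify ? Math.max(diff, 0) : Math.abs(diff);
  }
  return flux;
}

// Flux for every frame; the first frame has no predecessor, so it gets 0.
function fluxSeries(spectra, rectify = true) {
  const series = [0];
  for (let i = 1; i < spectra.length; i++) {
    series.push(spectralFlux(spectra[i - 1], spectra[i], rectify));
  }
  return series;
}

// Keep only values that exceed a threshold based on the local average.
function pickPeaks(flux, windowSize = 3, multiplier = 1.5) {
  return flux.map((value, i) => {
    const start = Math.max(0, i - windowSize);
    const end = Math.min(flux.length, i + windowSize + 1);
    let sum = 0;
    for (let j = start; j < end; j++) sum += flux[j];
    const threshold = (sum / (end - start)) * multiplier;
    return value > threshold ? value : 0;
  });
}

// Toy "sine blip": two silent frames, three frames of one tone, two silent frames.
const quiet = [0, 0, 0, 0];
const tone = [0, 1, 0, 0];
const spectra = [quiet, quiet, tone, tone, tone, quiet, quiet];

const onsetsOnly = pickPeaks(fluxSeries(spectra, true));
const onsetsAndOffsets = pickPeaks(fluxSeries(spectra, false));
console.log(onsetsOnly);       // [ 0, 0, 1, 0, 0, 0, 0 ]
console.log(onsetsAndOffsets); // [ 0, 0, 1, 0, 0, 1, 0 ]
```

With real audio the spectra would come from an FFT of each frame, and the numbers would be much messier than this toy case.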

Adding Pitch Detection and I don't know Javascript 4/5

So the first step of the new project was getting pitch detection working. This was simple enough: I just had to add the module I mentioned in last week's post into my webpage. This went swimmingly besides issues with CSS, which I chose not to address because that is not the point of this class. So I got that working with all three forms of audio: live input, a recorded sample, and an oscillator.

Later on in the week I tried to make the program track each new pitch that was made and hold onto it to display it when the recording was done. This kind of worked, but also didn't work at all. I am fairly certain the path I am taking is right, but I think fundamentally I am doing the wrong thing. I believe the issue is arising from an error with pass by reference vs pass by value. Currently I have written:

    var note = noteFromPitch( pitch );
    if (currNoteArray.length == 0) {
        currNoteArray.push(note);
    } else if (currNoteArray[currentNoteArray.length - 1] != note){
        currNoteArray.push(note);
    }

Pitch is a frequency and note is a MIDI note value. Hypothetically, this code should add the MIDI note value to the end of my note array if it is the first one or if it is different from the last note added to the array. I think what might be happening is an error with passing by reference. I would like currNoteArray to store just the value of note, but I think it is storing the address location, which is messing up displaying the note later on in the program. I tried googling a solution to it, but I think I can't find one because I am thinking in a C++ mindset and not a scripting mindset. I was going to get Rob's opinion because I can't seem to get it to work.
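For context on the frequency to MIDI step, here is a minimal sketch using the standard equal temperament formula, where A4 = 440 Hz is MIDI note 69. I am assuming the module's noteFromPitch does something along these lines. This version is my own and not copied from it.

```javascript
// Convert a frequency in Hz to the nearest MIDI note number,
// using A4 = 440 Hz = MIDI note 69 and 12 semitones per octave.
function noteFromPitch(frequencyHz) {
  const semitonesFromA4 = 12 * Math.log2(frequencyHz / 440);
  return Math.round(semitonesFromA4) + 69;
}

console.log(noteFromPitch(440));    // 69 (A4)
console.log(noteFromPitch(261.63)); // 60 (middle C)
console.log(noteFromPitch(880));    // 81 (A5)
```

Because the result is rounded to a whole number, small wobbles in the detected frequency map to the same note, which is what makes the "different from the last note" comparison workable.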

The Essential to My Project in Web Audio 3/29

So now that I have Web Audio working on my website, the next step is implementing my project idea as a web app. The essential components are pitch identification, onset identification (for the start of notes and their finishes), and some way to record the information and convert it to some form of common format (probably MusicXML).

I have found some code for a potential pitch detection software on GitHub. I'm not sure if there is a way to include this as a module or if I just copy the code over. The options for onset detection are a Web Audio specific one or this generic JavaScript one. The Web Audio specific one looks like it is too complicated for my needs, but it is already set up to work with Web Audio. The generic JavaScript one looks like it is more appropriately scoped, but I would have to do all the Web Audio setup myself. This week I will try to get the pitch detection working as I think that will be quicker, but I will move on to onset detection shortly after.

I will do all the conversion to MusicXML myself unless there is a super easy module to do it for me.
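As a first idea of what that conversion might involve, here is a tiny sketch that turns a MIDI note number into a MusicXML pitch element. The function name and the choice to always spell black keys as sharps are my assumptions. A real exporter would also need durations, measures, and the rest of the score structure.

```javascript
// Step name and alteration (1 = sharp) for each of the 12 pitch classes.
// Black keys are always spelled as sharps here, which is a simplification.
const STEPS = [
  ["C", 0], ["C", 1], ["D", 0], ["D", 1], ["E", 0], ["F", 0],
  ["F", 1], ["G", 0], ["G", 1], ["A", 0], ["A", 1], ["B", 0],
];

// Build a MusicXML <pitch> element for a MIDI note number.
function midiToMusicXmlPitch(midiNote) {
  const [step, alter] = STEPS[midiNote % 12];
  const octave = Math.floor(midiNote / 12) - 1; // MIDI 60 is middle C, octave 4
  const alterTag = alter !== 0 ? `<alter>${alter}</alter>` : "";
  return `<pitch><step>${step}</step>${alterTag}<octave>${octave}</octave></pitch>`;
}

console.log(midiToMusicXmlPitch(60)); // <pitch><step>C</step><octave>4</octave></pitch>
console.log(midiToMusicXmlPitch(61)); // <pitch><step>C</step><alter>1</alter><octave>4</octave></pitch>
```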

Web Audio Start 3/22

I have created another tab on my website for the work I am going to be doing with Web Audio. As of today I have it playing some lovely royalty free music. I followed this tutorial for setting up this very basic playback. This took me about an hour and a half (less if I would stop spelling things wrong ¯\_(ツ)_/¯). From here I am going to look into how to include libraries/packages in Web Audio. I assume it integrates with the JavaScript, but this is a whole new world for me.

Midterm Presentation Feedback 3/20

I presented my progress on March 2nd and it performed as I expected it to. Unfortunately my expectations were not for it to identify pitch. Currently onset detection works perfectly, but pitch detection does not. This is an essential component of my project, so Rob recommended that I pivot. This was not wasted time, as this is part of a design/iteration process. Rob suggested looking into possibly using Web Audio, as a lot of people are currently working on it (so I can find help online) and it can then run as a web application. Another suggestion was to look closer into The Synthesis Toolkit (STK), as it might have pitch detection buried in it somewhere.

One thing I mentioned working on next was adding MIDI playback to my software. A suggestion was to focus first on making sure that the software has a common export file type, like MusicXML, so that music notation software can import the file and use it. This would encourage people to use the software, as there would be a way to get their ideas out of it.

My next step will be to look into Web Audio. I didn't do work on anything over spring break, so now I am going to jump back into it.

Why won't you work???

Aubio has several basic structure types that handle all the identification. And I have figured out how they work! Well, really just the one that identifies the pitch. So far I feel like the only two structures I will need to use are the pitch and onset identifications. I cannot get the onset detection structure to work. It is not properly identifying when a note starts. It says that if it detects a note it will return a number between 1 and 2 to give the relative distance along the input hopper. Right now it is returning the number 4 on the first input hopper. I assume I need to play with some of the thresholds, like the silence and peak thresholds. I will be seeking help from Rob to find the issue.

A Hello World Start... Almost

Aubio has been much more difficult to work with than I thought it would be. It has all its own types and data structures. I thought I was close to getting basic pitch detection working, but I can't figure out what to do with the values I get. I also realized last Thursday that Aubio has no MIDI playback, or any sort of playback whatsoever. So I will have to use a different package for that. Fortunately, when I was first looking around I found a C++ package called The Synthesis Toolkit. It is all based around MIDI sounds, and has a lot of instrument options. Currently, I am just trying to work with the software package and having a rough time. I wasn't able to get as much done as I would have liked because I went away for the 3-day weekend, but I am ready to make this work.

A New Library

After doing a lot of looking, I will be using the library Aubio for this project. It has all the tools I need, from pitch recognition to beat tracking. The only catch is that it is a library for C and Python. I know that you can compile C with a C++ compiler, so I will just have to do a bit more work to get it to work. It should be fine overall.

Week of 1/27: My Project Concept

The project I will be working on for the semester will be slightly different than the stuff I have been exploring. The idea for my project comes from the album "Voice Notes" by Charlie Puth. He titled the album as such because if he had an idea for a song, melody, or lyrics, he would sing it into the voicenotes app on his iPhone. So I thought, what if there was a more fleshed out version of that? An app for musicians to sing or play ideas into on their phone for later. I am still deciding on the full capability of the application. I would like to have the app identify the pitches and transcribe them into a rhythmic schema identified by the user, and then allow for MIDI or original playback. The MIDI playback would allow the user to record multiple lines, then specify instruments and see how they sound together on the fly.

I have decided to work in C++, and I will be putting all my coding work into this GitHub repo so that anyone can see what I am working on. I do not have a good name for the app yet, so if anyone has any suggestions that would be cool. I am still deciding on what audio package to use. Right now I am deciding between SuperPowered SDK and The Synthesis Toolkit.

Week of 1/20: Computer Music Research

This week I looked over some papers. One that caught my eye was a paper about Coding with a Piano. I was able to find a video of one of the demonstrations of it. The idea is very interesting, and the fact that our classroom has a MIDI piano in it makes me think that there is potential for pursuing something along these lines. I also found this paper on Using Video Games as a method of Improvization, which got me thinking about designing an instrument around the use of a controller. The joysticks make me think of a way to build a fun synth modulator. I would have to think further on it.

Beyond the pure coding aspects, the idea of building a physical electronic instrument interests me greatly. The Modulin made by Martin Molin really fascinates me. The instrument takes its name from a modular synthesizer played sort of like a violin. I find systems like this incredibly fascinating.

In response to the augmented instruments discussed in class on 1/23: a guitar with built-in audio effects.

Week of 1/13: A Beginning

First post for the class. In class on Monday I mentioned that I was thinking about doing something related to modifying live performance, and said the name Jacob Collier. One of his interesting live performances was for a TED Talk in which he loops in quite astounding ways. Another aspect of his live performance is the use of a live Prismizer sound effect. He has one of his keyboards running a version of the Prismizer vocal effect or vocoder. Manipulating a live performance in this way has always been very interesting to me.